🔍 Overview

What good is data if you can't interpret it and make actionable decisions? The field of data visualization covers a wide range of topics, including data collection, querying, classification, and, of course, visualization. None of these is more important than the others, and deciding how best to present data is an interesting challenge, since the answer is highly subjective.

This course covers many topics and languages (JavaScript, SQL, and Java for Hadoop) and involves both implementing methods from scratch and using existing APIs: writing queries, designing charts and interactive visualizations with D3.js, and even implementing PageRank in Python. There is also an open-ended group project that runs over the whole semester and must cover a few of these topics.

*I took this course in Fall 2019, so its contents may have changed since then.

🏢 Structure

- Four assignments - 50% (of the final grade)

- Group Project - 50%

  - Includes report, updates, presentation, implementation

Assignments

You get a month to complete each assignment. The assignments are project-style: you generate figures, use high-level APIs, and implement algorithms.

- Collecting & Visualizing data - SQLite, D3 warmup, testing out OpenRefine

- Graphs and Visualization - D3.js in depth to visualize data, graphs, and relations

- Big Data Tools - Hadoop, Spark, Pig and Azure

- Machine Learning Algorithms - Implementing PageRank, random forests, and using sklearn

Group Project

Throughout the semester, you make progress on an open-ended group project. The required components are a large, real-world dataset, some analysis and/or computation performed on it, and a user interface for interacting with your algorithm.

My group worked on a song recommendation system based on the Spotify API.

📖 Assignment 1 - Collecting & Visualizing Data

The assignments in this course are mostly about experimenting with many different frameworks, so instead of introducing course concepts, I will just go over the results.

Rebrickable is a catalogue of Lego parts with an API that lets you search for parts and find relations between Lego sets. For this part, we use HTTP requests to fetch specified parts, then form relations between the components to create a node network, saved in GEXF (Graph Exchange XML Format). Apologies, I am missing all graphics for this assignment.
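Here is a minimal sketch of the last step: writing the network out as GEXF. It assumes the fetched data has already been reduced to a list of weighted `(source, target, weight)` relations. The HTTP fetching is omitted, and the set/part labels and quantity weights in the example are hypothetical. `to_gexf` is an illustrative helper built on Python's standard `xml.etree.ElementTree`, not part of any Rebrickable tooling. It emits the basic GEXF 1.2 layout (a `<graph>` holding `<nodes>` and `<edges>`), which Gephi can open.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Tuple

GEXF_NS = "http://www.gexf.net/1.2draft"

def to_gexf(edges: List[Tuple[str, str, float]]) -> str:
    """Serialize weighted (source, target, weight) relations as a GEXF 1.2 string."""
    # Setting xmlns as a plain attribute makes it the document's default namespace.
    root = ET.Element("gexf", {"xmlns": GEXF_NS, "version": "1.2"})
    graph = ET.SubElement(root, "graph", {"mode": "static", "defaultedgetype": "undirected"})
    nodes_el = ET.SubElement(graph, "nodes")
    edges_el = ET.SubElement(graph, "edges")

    # Give each distinct label a numeric node id the first time it appears.
    node_ids: Dict[str, str] = {}
    for source, target, _ in edges:
        for label in (source, target):
            if label not in node_ids:
                node_ids[label] = str(len(node_ids))
                ET.SubElement(nodes_el, "node", {"id": node_ids[label], "label": label})

    # One <edge> element per relation, referencing the node ids assigned above.
    for i, (source, target, weight) in enumerate(edges):
        ET.SubElement(edges_el, "edge", {
            "id": str(i),
            "source": node_ids[source],
            "target": node_ids[target],
            "weight": str(weight),
        })
    return ET.tostring(root, encoding="unicode")

if __name__ == "__main__":
    # Hypothetical relations: (set, part, quantity of that part in the set).
    relations = [("Set 1", "Part A", 2), ("Set 1", "Part B", 1), ("Set 2", "Part A", 4)]
    print(to_gexf(relations))
```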

There is also a SQL exercise, where you complete queries according to the instructions.
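To give a rough idea of the kind of query involved, such as finding the top-level themes with the most sets, here is a self-contained sketch that runs a comparable query through Python's built-in `sqlite3` module. The exercise itself was done directly in SQLite. The table layout, the sample rows, and my reading of "top-level theme" as "root of the theme hierarchy, counting sets in all of its sub-themes" are assumptions, and `build_demo_db` / `top_level_themes` are hypothetical helpers.

```python
import sqlite3
from typing import List, Tuple

# Hypothetical, simplified schema loosely modeled on Rebrickable's themes/sets data.
SCHEMA = """
CREATE TABLE themes (id INTEGER PRIMARY KEY, name TEXT NOT NULL, parent_id INTEGER);
CREATE TABLE sets (set_num TEXT PRIMARY KEY, name TEXT NOT NULL, theme_id INTEGER NOT NULL);
"""

# Walk each theme up to its top-level ancestor, then count sets per ancestor.
TOP_LEVEL_THEMES = """
WITH RECURSIVE theme_root(id, root_id) AS (
    SELECT id, id FROM themes WHERE parent_id IS NULL  -- top-level themes are their own root
    UNION ALL
    SELECT t.id, r.root_id                             -- child themes inherit their parent's root
    FROM themes AS t JOIN theme_root AS r ON t.parent_id = r.id
)
SELECT th.name, COUNT(*) AS num_sets
FROM sets AS s
JOIN theme_root AS r ON s.theme_id = r.id
JOIN themes AS th ON th.id = r.root_id
GROUP BY th.id, th.name
ORDER BY num_sets DESC, th.name
"""

def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database filled with made-up sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO themes VALUES (?, ?, ?)", [
        (1, "Technic", None), (2, "Supercar", 1), (3, "City", None),
        (4, "Police", 3), (5, "Star Wars", None),
    ])
    conn.executemany("INSERT INTO sets VALUES (?, ?, ?)", [
        ("a-1", "Set A", 1), ("b-1", "Set B", 2), ("c-1", "Set C", 2),
        ("d-1", "Set D", 4), ("e-1", "Set E", 5),
    ])
    return conn

def top_level_themes(conn: sqlite3.Connection) -> List[Tuple[str, int]]:
    return conn.execute(TOP_LEVEL_THEMES).fetchall()

if __name__ == "__main__":
    print(top_level_themes(build_demo_db()))
```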

The queries go into a template like this:

```sql
-- (a.ii) Import data
-- [insert your SQLite command(s) BELOW this line]

...

-- (d) Finding top level themes with the most sets.
-- [insert your SQL statement(s) BELOW this line]
```

OpenRefine is a tool for data cleaning and transformation. It has a spreadsheet-like interface, gives useful feedback about your data, and supports powerful transformation functions.

For example, you can cluster similar names and merge categories, which is effective for cleaning up inconsistent entries in your dataset.

For this portion of the assignment, we were given steps to complete for a dataset using OpenRefine.
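One of OpenRefine's clustering methods is key collision using a "fingerprint" keying function. Each value is trimmed, lowercased, stripped of punctuation and accents, and split into tokens, and the unique tokens are sorted and rejoined. Values that share a key are proposed as one cluster. The following is a rough Python approximation of that idea for illustration, not OpenRefine's actual implementation. `fingerprint` and `cluster` are hypothetical names.

```python
import string
import unicodedata
from collections import defaultdict
from typing import Dict, List

def fingerprint(value: str) -> str:
    """Approximate a fingerprint key for one cell value."""
    value = value.strip().lower()
    # Decompose accented characters, then drop the non-ASCII combining marks.
    value = unicodedata.normalize("NFKD", value).encode("ascii", "ignore").decode("ascii")
    # Remove ASCII punctuation.
    value = value.translate(str.maketrans("", "", string.punctuation))
    # Unique tokens in sorted order, so word order and repeated words don't matter.
    return " ".join(sorted(set(value.split())))

def cluster(values: List[str]) -> List[List[str]]:
    """Group values whose fingerprints collide; keep only groups with 2+ distinct spellings."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for v in values:
        groups[fingerprint(v)].append(v)
    return [g for g in groups.values() if len(set(g)) > 1]

if __name__ == "__main__":
    print(cluster(["Georgia Tech", "georgia tech.", "Tech, Georgia", "MIT"]))
```

In OpenRefine you would then pick one spelling per cluster and merge the others into it.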

📖 Assignment 2 - Graphs and Visualization

D3.js (Data-Driven Documents) is a JavaScript library for creating interactive graphs and visualizations. Specifically, it lets you bind data to elements in the browser's DOM and then apply data-driven transformations to them. D3 abstracts away much of the underlying DOM manipulation.

It offers very low-level control, letting you customize every aspect of the output. For example, here is one way to add an x-axis label:

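```javascript
// Append a centered x-axis label below the chart
// (assumes `svg`, `width`, and `height` are defined by the surrounding chart code)
svg.append("text")
  .attr("transform", `translate(${width / 2},${height + 45})`)
  .style("text-anchor", "middle")
  .attr("font-size", "22px")
  .text("Year");
```

This can be quite cumbersome compared to matplotlib's defaults in Python, but there is rich community support and many shared examples.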
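Underneath that low-level API, D3's data binding is built around a keyed data join. When you bind a new array to a selection with `selection.data(data, key)`, D3 matches data to existing elements by key. Unmatched data goes to the enter selection (elements to create), matched data to the update selection, and unmatched elements to the exit selection (elements to remove). The Python sketch below only models that bookkeeping, with plain key lists standing in for DOM elements. `data_join` is a hypothetical helper, not part of D3.

```python
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")

def data_join(existing_keys: List[str], data: List[T],
              key: Callable[[T], str]) -> Tuple[List[T], List[T], List[str]]:
    """Split new data into enter/update lists, and existing keys into an exit list."""
    existing = set(existing_keys)
    incoming = {key(d) for d in data}
    enter = [d for d in data if key(d) not in existing]           # no element yet: create one
    update = [d for d in data if key(d) in existing]              # element exists: update it
    exit_keys = [k for k in existing_keys if k not in incoming]   # element without data: remove it
    return enter, update, exit_keys

if __name__ == "__main__":
    rows = [{"year": "1999", "v": 1}, {"year": "2000", "v": 2}, {"year": "2001", "v": 3}]
    print(data_join(["1998", "1999", "2000"], rows, key=lambda d: d["year"]))
```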

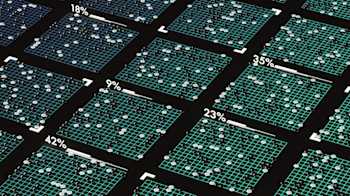

Here are some outputs for the assignment

.gif#medimage)

.gif#medimage)

📖 Assignment 3 - Big Data Tools

Hadoop MapReduce is a framework for processing data in parallel across many machines. The input is split into chunks that are processed by map tasks in parallel; the map outputs are then grouped by key and passed as input to the reduce tasks.

A simple example is a word-count program. Given a large number of text files, the task is to count the occurrences of each word. The mapper goes through its input word by word, emitting a count for each word; the reducer then combines and sums these partial counts to produce the final totals.
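To make the three phases concrete, here is a single-process Python simulation of word count. It is not Hadoop's Java API. The mapper emits `(word, 1)` pairs, a shuffle step (which Hadoop performs for you) groups them by word, and the reducer sums each group. All function names are illustrative.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

def mapper(line: str) -> Iterator[Tuple[str, int]]:
    """Map phase: emit (word, 1) for every word in one line of input."""
    for word in line.lower().split():
        yield word, 1

def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle phase: group all emitted values by key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for word, count in pairs:
        groups[word].append(count)
    return groups

def reducer(word: str, counts: List[int]) -> Tuple[str, int]:
    """Reduce phase: sum the partial counts for one word."""
    return word, sum(counts)

def word_count(lines: Iterable[str]) -> Dict[str, int]:
    mapped = (pair for line in lines for pair in mapper(line))
    return dict(reducer(word, counts) for word, counts in shuffle(mapped).items())

if __name__ == "__main__":
    print(word_count(["the cat", "The dog the end"]))
```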

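We used MapReduce (in Java) to process a graph of weighted, directed edges from source to target nodes. The task is to find, for each source node, the outgoing edge with the maximum weight. For example, here is a sample graph and its output. Note that node 10 has two edges of weight 3 (to 110 and to 70), and the output lists 70.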

```text
// Graph
src tgt weight
10 110 3
10 200 1
200 150 30
100 110 10
110 130 15
110 200 67
10 70 3

// Output: src, (target, weight)
10 70,3
200 150,30
100 110,10
110 200,67
```

The second question of the assignment involved analyzing a large graph with Spark/Scala on Databricks. We use Scala notebooks, which work much like Jupyter notebooks but run on Databricks servers. We apply a variety of operations to a large dataset of questioners and answerers, such as counting the number of Q/A pairs per month.
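Going back to the first question, the max-weight task follows the same map, shuffle, reduce pattern as word count. This Python sketch simulates it in a single process rather than reproducing the Java solution. The map step keys each edge by its source node, and the reduce step keeps the heaviest edge per source. The tie-breaking rule (prefer the smaller target ID) is my assumption, chosen because it is consistent with node 10's sample output. Function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

def map_edge(line: str) -> Tuple[int, Tuple[int, int]]:
    """Map: parse 'src tgt weight' into a (src, (tgt, weight)) key/value pair."""
    src, tgt, weight = (int(x) for x in line.split())
    return src, (tgt, weight)

def reduce_edges(edges: List[Tuple[int, int]]) -> Tuple[int, int]:
    """Reduce: keep the heaviest edge; on a tie, prefer the smaller target id (assumed rule)."""
    return max(edges, key=lambda e: (e[1], -e[0]))

def max_weight_edges(lines: Iterable[str]) -> Dict[int, Tuple[int, int]]:
    grouped: Dict[int, List[Tuple[int, int]]] = defaultdict(list)
    for line in lines:
        if line.strip():
            src, value = map_edge(line)
            grouped[src].append(value)  # shuffle: group values by source node
    return {src: reduce_edges(edges) for src, edges in grouped.items()}

if __name__ == "__main__":
    sample = ["10 110 3", "10 200 1", "200 150 30", "100 110 10",
              "110 130 15", "110 200 67", "10 70 3"]
    for src, (tgt, weight) in max_weight_edges(sample).items():
        print(f"{src}\t{tgt},{weight}")
```

On the sample graph this produces the same four source/target/weight pairs as the sample output, in the same order.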

We also touch on Azure Machine Learning Studio, which lets you build machine learning pipelines visually by connecting modules in a flow. We start with one of its tutorials.

📖 Assignment 4 - Machine Learning Algorithms

In the final assignment, we implement and experiment with a few machine learning algorithms, including PageRank, decision trees and random forests, and some popular classifiers in sklearn.

PageRank is the algorithm that originally powered the ranking of Google Search results. A page's importance is determined by the number and quality of the links that point to it.

The version from the lectures, personalized PageRank, iterates over the graph multiple times, updating the value of each node (page) on every pass.

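$$
PR_{t+1}(v) = (1 - d)\,P_d(v) + d \sum_{u \in \mathrm{In}(v)} \frac{PR_t(u)}{\mathrm{out}(u)}
$$

- $PR_t(v)$ represents the value of node $v$ at iteration $t$

- $d$ is the damping factor (0 to 1)

- $P_d(v)$ is the probability of a random jump to node $v$ (the personalized aspect: preferred nodes get a higher probability)

- $\mathrm{In}(v)$ is the set of nodes $u$ that link to node $v$

- $\mathrm{out}(u)$ is the number of outbound links on node $u$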

What does this all really mean? Essentially, a node with many incoming links gains value through the summation over those links. This effect propagates: once a node has a high value, it passes more of that value on to the nodes it links to, split evenly across its outbound links. The random-jump term adds personalization by boosting preferred nodes by a configurable amount. For example, if you express interest in a certain product, related links can be weighted higher.
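A minimal Python sketch of this iterative update: each node's new value is $(1-d)$ times its random-jump probability plus $d$ times the sum of its in-neighbors' values, each divided by that neighbor's out-degree. It is not the assignment's reference code. The function name, the fixed iteration count (instead of a convergence check), and the example graph are assumptions. Dangling nodes (no outbound links) are not handled specially, so their score simply leaks away. With a uniform `jump` vector it reduces to standard PageRank.

```python
from typing import List, Optional, Tuple

def personalized_pagerank(num_nodes: int, edges: List[Tuple[int, int]], d: float = 0.85,
                          jump: Optional[List[float]] = None,
                          iterations: int = 100) -> List[float]:
    """Iterate PR_{t+1}(v) = (1 - d) * jump[v] + d * sum(PR_t(u) / out(u) for u linking to v)."""
    if jump is None:
        jump = [1.0 / num_nodes] * num_nodes  # uniform random jump = classic PageRank
    out_degree = [0] * num_nodes
    incoming: List[List[int]] = [[] for _ in range(num_nodes)]
    for u, v in edges:  # edge u -> v
        out_degree[u] += 1
        incoming[v].append(u)

    pr = [1.0 / num_nodes] * num_nodes  # start from a uniform distribution
    for _ in range(iterations):
        pr = [(1 - d) * jump[v] + d * sum(pr[u] / out_degree[u] for u in incoming[v])
              for v in range(num_nodes)]
    return pr

if __name__ == "__main__":
    graph = [(0, 1), (1, 2), (2, 0), (2, 1)]
    print(personalized_pagerank(3, graph))                         # uniform jump
    print(personalized_pagerank(3, graph, jump=[1.0, 0.0, 0.0]))   # prefer node 0
```

Concentrating `jump` on node 0 raises node 0's final score compared with the uniform case, which is the personalization effect described above.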

We also implement a random forest learner from scratch. Since it is a popular topic, there are already many great resources on it, so I won't go into more detail.

With sklearn, we go over the standard steps for training and testing a model: loading data, preprocessing, training, and testing with a few of the built-in classifiers. We also cover grid search for hyperparameter tuning, a brute-force method that evaluates every combination of hyperparameter values in a predefined grid and selects the best-performing one. For a more in-depth review of this topic, I'd recommend this Kaggle notebook.
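In sklearn this is what `GridSearchCV` does, scoring each combination with cross-validation. To show the brute-force idea itself without depending on sklearn, here is a small standard-library sketch. `grid_search` is a hypothetical helper, and `score_fn` stands in for something like mean cross-validated accuracy; the toy scoring function and parameter values in the example are made up.

```python
from itertools import product
from typing import Any, Callable, Dict, List, Optional, Tuple

Params = Dict[str, Any]

def grid_search(score_fn: Callable[[Params], float],
                param_grid: Dict[str, List[Any]]) -> Tuple[Optional[Params], float]:
    """Try every combination in param_grid and return the highest-scoring one."""
    names = list(param_grid)
    best_params: Optional[Params] = None
    best_score = float("-inf")
    # The Cartesian product of all candidate values is every point in the grid.
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score > best_score:  # strict '>' keeps the first combination on ties
            best_params, best_score = params, score
    return best_params, best_score

if __name__ == "__main__":
    # Toy stand-in for a validation score that peaks at max_depth=4, n_estimators=50.
    def toy_score(p: Params) -> float:
        return -((p["max_depth"] - 4) ** 2) - ((p["n_estimators"] - 50) / 10) ** 2

    print(grid_search(toy_score, {"max_depth": [2, 4, 8], "n_estimators": [10, 50, 100]}))
```

The cost grows multiplicatively with each added hyperparameter (here 3 × 3 = 9 evaluations), which is why grid search is described as brute force.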

💻 Project - Song Recommender

Our group created a song recommendation and visualization website. Given an input seed song, we provide not just a list of recommended songs, but the reasons why they are recommended, as well as further details about song features and the artist.

Our song database was scraped using the Spotify API. We connected songs by the same artist, and also linked each song to the songs with the most similar audio features (provided by the API).
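A rough sketch of how such a graph could be built. It is not our project's actual code. It assumes each song record holds an artist name and a small tuple of audio-feature values (Spotify's audio features include values such as danceability and energy), already scaled to a comparable range. It also assumes similarity is measured by Euclidean distance with a fixed `k` nearest neighbors. The record layout, `build_song_graph`, and the example data are hypothetical.

```python
import math
from itertools import combinations
from typing import Dict, Set, Tuple

Song = Dict[str, object]        # hypothetical record: {"artist": str, "features": tuple of floats}
Edge = Tuple[str, str, str]     # (song_a, song_b, reason)

def build_song_graph(songs: Dict[str, Song], k: int = 2) -> Set[Edge]:
    edges: Set[Edge] = set()
    # Edges between every pair of songs by the same artist.
    for a, b in combinations(sorted(songs), 2):
        if songs[a]["artist"] == songs[b]["artist"]:
            edges.add((a, b, "artist"))
    # Edges from each song to its k nearest neighbours in audio-feature space.
    for s in songs:
        others = sorted(
            (t for t in songs if t != s),
            key=lambda t: (math.dist(songs[s]["features"], songs[t]["features"]), t),
        )
        for t in others[:k]:
            edges.add((min(s, t), max(s, t), "audio"))  # store each undirected edge once
    return edges

if __name__ == "__main__":
    demo = {
        "a": {"artist": "X", "features": (0.9, 0.8)},
        "b": {"artist": "X", "features": (0.1, 0.2)},
        "c": {"artist": "Y", "features": (0.85, 0.75)},
    }
    print(sorted(build_song_graph(demo, k=1)))
```

Keeping the edge reason ("artist" or "audio") on each edge is one way to support explaining why a song was recommended.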

The recommendations were built from 300k songs, but any song on Spotify could be searched for and added to the database. While I was taking the course, we hosted the site on AWS so other students could try it out.

⏩ Next Steps

The course used many languages and frameworks. I will admit that after the course, I did not feel especially comfortable with any of them. But I don't think that's the point of these assignments or of the course. Rather, the goal is to get your feet wet and gain enough exposure to figure out what you want to pursue and dive deeper into on your own. Although this is true of most courses, it is especially relevant here, as modern tools make it possible to build quick demo applications in this field.